Time to first tweet

At Twitter, one of our key metrics for success was 'time to first tweet': the time between someone navigating to twitter.com and seeing the first Tweet on the page. I've always thought it a useful metric, as initial interaction speed is vitally important; take too long, and users lose focus and leave.

There seems to be a lot of fear, uncertainty and doubt (FUD) in the programming community around the speed of JS web apps, especially their initial page load. Server-side-generated HTML is making a comeback; indeed, Twitter eventually moved away from a stateful client and back to rendering on the server. The single-page-app approach seems to have many vocal opponents.

I'm here to put some of that FUD to rest, and show you that it's perfectly possible to make JS web apps that load extremely fast. I'm going to show you some of the techniques I used to make monocle.io so fast.

First, a little disclaimer. As always, this approach may not be for everyone. If it turns out that server-side rendering is best for you, then great - use it! Know your options, and use the best tool for the job.

Bottlenecks

In my experience there are four main bottlenecks to initial page loads. They are, in no specific order:

- Blocking IO - especially DB drivers

- Database - slow/many SQL queries

- Network - fetching assets over a TCP connection

- Client - browser processing speed

Blocking

By default, Rails apps are single-threaded, so only one request is processed at a time. Even if you're not using Rails, or you turn on threading, network and DB calls can block the interpreter for everyone, since threads are restricted by the GIL. [1]

MRI is restricted by the GIL (Global Interpreter Lock), which prevents Ruby threads from running Ruby code in parallel. While modern DB drivers release the lock during IO calls, the fact remains that only one Ruby thread executes Ruby code at any one time.
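To see why IO-bound work still benefits from threads under MRI, here is a minimal sketch. It assumes `sleep` is a fair stand-in for a DB or network call, which holds only for drivers that release the GIL while waiting (as the footnote notes modern adapters do); `fake_db_call` and the 0.2 second latency are hypothetical.

```ruby
# Measure wall-clock time for a block using a monotonic clock.
def timed
  start = Process.clock_gettime(Process::CLOCK_MONOTONIC)
  yield
  Process.clock_gettime(Process::CLOCK_MONOTONIC) - start
end

# Hypothetical stand-in for a DB round trip. Like a well-behaved driver
# waiting on a socket, sleep releases the GIL so other threads can run.
def fake_db_call
  sleep 0.2
end

# Five "requests" handled one after another...
sequential = timed { 5.times { fake_db_call } }

# ...versus five threads whose waits overlap.
threaded = timed { Array.new(5) { Thread.new { fake_db_call } }.each(&:join) }

puts format("sequential: %.2fs, threaded: %.2fs", sequential, threaded)
```

On MRI the threaded run should take roughly as long as a single call, while the sequential run takes about five times as long. CPU-bound Ruby code would not overlap this way, which is the part of the GIL story that still bites.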

If you go down the evented route, with EventMachine & Thin, you'll have to make sure that every single part of your application is EM-aware, from the server to the database driver. Unfortunately this often requires rewriting large parts of your application. Many libraries and middleware either don't support EM, or require patching. You can see the problem manifest itself in the number of gems that inject EM code into popular libraries; it's not pretty.

Process-based web servers, like Unicorn, are a fine choice. They're reliable and, as long as you have enough processes, you'll mostly solve the IO/GIL blocking problem. The downside, of course, is that each process has a significant memory overhead.

There's a new threaded server on the block, Puma, that has some really promising benchmarks. I've been experimenting with running Monocle on Puma, instead of Unicorn, and getting some satisfying performance improvements. As soon as Rubinius is a bit more stable, I'll switch to that runtime and take advantage of real threads that aren't throttled by the GIL.

Database

If you take a look at monocle.io, you'll notice that we need to fetch a list of the most popular posts on every page load. This needs to happen really fast, since the posts are loaded concurrently with the page request.

However, this wasn't happening as quickly as I'd expected. I investigated further, and it turned out that every request to fetch the top 30 posts was running about 151 SQL queries. Specifically, this method was the culprit:

```ruby
def as_json(options = nil)
  user = (options || {})[:user]

  {
    id: id,
    votes: votes,
    voted: self.voted_users.include?(user), # checks the post's voters (SQL)
    created: self.user == user,             # loads the post's author (SQL)
    score: score,
    title: title,
    url: url,
    comments_count: self.comments.count,    # COUNT query on comments (SQL)
    user_handle: self.user.handle,          # reads the author association
    created_at: created_at
  }
end
```

Notice that we're doing five extra SQL queries whenever we serialize a Post to JSON. Clearly this is far from efficient, and we need to do a bit of denormalization.

I created columns on the posts table for voted_user_ids, comments_count and user_handle, and kept that data up to date using Postgres triggers. You may prefer to do this at the ORM level (say, with an ActiveRecord counter cache), but I'd rather leverage the DB. Since this particular application (Monocle) is read-heavy, I'm not too worried if writes take a bit longer and data is duplicated.

By getting rid of all those extra queries in the JSON serialization, I was able to return a set of posts with only two SQL queries - one to find the currently logged in user, the second to fetch the top posts.
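With those columns in place, the serializer can read everything from the row it already has. The sketch below uses a plain Ruby Struct instead of an ActiveRecord model so it runs on its own; the `Post` and `User` structs, and the assumption that `voted_user_ids` is an array of user ids, are hypothetical stand-ins for Monocle's actual schema.

```ruby
require "json"

# Hypothetical stand-in for a denormalized posts row: voted_user_ids,
# comments_count and user_handle are plain columns (kept current by DB
# triggers in the article), so reading them needs no further queries.
Post = Struct.new(:id, :votes, :score, :title, :url, :user_id,
                  :voted_user_ids, :comments_count, :user_handle, :created_at,
                  keyword_init: true) do
  def as_json(options = nil)
    user = (options || {})[:user]
    uid  = user&.id
    {
      id: id,
      votes: votes,
      voted: !uid.nil? && voted_user_ids.include?(uid), # array column, no join
      created: !uid.nil? && user_id == uid,             # compare ids, no author lookup
      score: score,
      title: title,
      url: url,
      comments_count: comments_count,                   # cached count, no COUNT(*)
      user_handle: user_handle,                         # copied from the author's handle
      created_at: created_at
    }
  end
end

User = Struct.new(:id, :handle)

alice = User.new(1, "alice")
post  = Post.new(id: 7, votes: 3, score: 1.5, title: "Hello", url: "http://example.com",
                 user_id: 2, voted_user_ids: [1, 5], comments_count: 4,
                 user_handle: "bob", created_at: "2013-07-03T15:09:55Z")

puts JSON.generate(post.as_json(user: alice))
```

The trade-off is the one described above: the triggers do extra work on every write so that every read is a single-table lookup.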

Network

Your users' network is the one part of the equation that is particularly hard to speed up. Google have been developing SPDY to try to address some of TCP's pitfalls, and if your server supports it, I'd recommend enabling it.

Ultimately the answer to improving the download time of pages is to try and send less over the network, reducing both the size and number of assets.

Minify JavaScript and CSS

Run your JavaScript through UglifyJS, and your CSS through YUI compressor.

UglifyJS goes further than stripping whitespace and comments: it rewrites as much of your JS as it safely can to save characters, for example by shortening local variable names. Less data over the wire means faster web pages.

Concatenate JavaScript & CSS

Concatenate all your JavaScript & CSS into two files, application.js and application.css. Fewer TCP handshakes and server requests often mean assets load faster.

It's a good idea to append a checksum or mtime of the file to the file's name. That way, whenever the file changes, its name changes too, and you get automatic cache invalidation.

If your application has a lot of frequently changing JavaScript alongside a lot of stable libraries, you may want to split the two into separate files, so that changes to your own code don't invalidate the cached libraries.
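A minimal sketch of concatenation plus fingerprinting, using a content checksum (MD5 via Ruby's Digest library) rather than an mtime. `build_bundle` and the file contents are hypothetical; joining JavaScript with `;\n` guards against a file that ends without a semicolon.

```ruby
require "digest"

# Concatenate sources into one bundle and embed a digest of the bundle's
# contents in its name: same contents => same name, any change => new name.
def build_bundle(sources, basename:, ext:, separator: "\n")
  body   = sources.join(separator)
  digest = Digest::MD5.hexdigest(body)
  ["#{basename}-#{digest}.#{ext}", body]
end

# Hypothetical sources: stable libraries kept apart from app code.
vendor = ["/* library code */ var $ = function () {}"]
app_v1 = ["var App = {}", "App.start = function () {}"]
app_v2 = app_v1 + ["App.version = 2"]

vendor_name, _ = build_bundle(vendor, basename: "vendor", ext: "js", separator: ";\n")
app1_name, _   = build_bundle(app_v1, basename: "application", ext: "js", separator: ";\n")
app2_name, _   = build_bundle(app_v2, basename: "application", ext: "js", separator: ";\n")

puts vendor_name, app1_name, app2_name
```

Changing the app code produces a new application bundle name, while the vendor bundle keeps its name and stays cached.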

GZip

GZip JavaScript & CSS assets so they're compressed over the wire. You don't need to GZip most image types as they're already compressed.

If you're using Rack, you can get this by simply adding the following line. Be aware though that it can conflict with some of your other middleware, and breaks Sinatra's streaming.

```ruby
use Rack::Deflater
```

Of all the tricks mentioned in this article, GZipping assets turned out to have the biggest impact on Monocle's load time.
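To illustrate what compression middleware does, here is a deliberately simplified Rack-style middleware built on Ruby's Zlib. `TinyGzip` is a hypothetical sketch, not Rack::Deflater's implementation: it buffers the whole body, ignores quality values in Accept-Encoding, and compresses every response regardless of content type.

```ruby
require "zlib"

# Follows Rack's convention: call(env) returns [status, headers, body],
# where body responds to #each. Gzips the body only if the client asks.
class TinyGzip
  def initialize(app)
    @app = app
  end

  def call(env)
    status, headers, body = @app.call(env)
    accepts_gzip = env.fetch("HTTP_ACCEPT_ENCODING", "").include?("gzip")
    return [status, headers, body] unless accepts_gzip

    raw = +""
    body.each { |chunk| raw << chunk } # buffer the full response body
    gzipped = Zlib.gzip(raw)
    headers = headers.merge("Content-Encoding" => "gzip",
                            "Content-Length"   => gzipped.bytesize.to_s,
                            "Vary"             => "Accept-Encoding")
    [status, headers, [gzipped]]
  end
end

# Hypothetical inner app serving a repetitive JavaScript asset.
js    = "function add(a, b) { return a + b; }\n" * 200
app   = ->(_env) { [200, { "Content-Type" => "application/javascript" }, [js]] }
stack = TinyGzip.new(app)

_, plain_headers, plain_body = stack.call({})
_, gz_headers, gz_body = stack.call("HTTP_ACCEPT_ENCODING" => "gzip, deflate")
puts "plain: #{plain_body.join.bytesize} bytes, gzip: #{gz_body.join.bytesize} bytes"
```

The Vary header tells caches that the response differs depending on Accept-Encoding, so a CDN won't hand a gzipped copy to a client that didn't ask for one.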

Cache

Enable long-lived caching for all your static assets. Set the expiry to about a year; HTTP/1.1 (RFC 2616) says servers should not send Expires dates more than one year in the future.

Setting Cache-Control to public with a max-age tells browsers (and shared caches such as proxies and CDNs) that they may store your assets and reuse them without requesting them again until they expire.

```
Cache-Control: public, max-age=31557600
Date: Wed, 03 Jul 2013 15:09:55 GMT
Expires: Thu, 03 Jul 2014 21:09:55 GMT
```

Cache invalidation is simple, since each asset's name includes its checksum or mtime: change the file (or, with mtimes, simply touch it) and its name changes. You'll get this for free with Sprockets, which fingerprints asset names with a digest of their contents.
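A minimal sketch of producing the headers above for a fingerprinted asset, using only Ruby's standard library. `long_cache_headers` is a hypothetical helper; 31,557,600 seconds is 365.25 days, which is why the example Expires time is six hours later in the day than the Date.

```ruby
require "time" # adds Time#httpdate

ONE_YEAR = 31_557_600 # seconds in 365.25 days

# Headers for a long-lived, publicly cacheable static asset.
def long_cache_headers(now = Time.now.utc)
  {
    "Cache-Control" => "public, max-age=#{ONE_YEAR}",
    "Date"          => now.httpdate,
    "Expires"       => (now + ONE_YEAR).httpdate
  }
end

headers = long_cache_headers(Time.utc(2013, 7, 3, 15, 9, 55))
headers.each { |name, value| puts "#{name}: #{value}" }
# Cache-Control: public, max-age=31557600
# Date: Wed, 03 Jul 2013 15:09:55 GMT
# Expires: Thu, 03 Jul 2014 21:09:55 GMT
```

Only send these headers for fingerprinted assets; an unversioned URL cached for a year can't be invalidated.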

CDN

Host all your static assets on a CDN like Amazon's CloudFront. That'll ensure that assets are served as fast as possible, and as geographically close to the client as possible.

For Monocle I simply proxy assets through CloudFront. If CloudFront doesn't have a file cached, there's a cache miss and CloudFront requests it from the server.

Cache invalidation is also incredibly simple: when a file changes, its name changes, which causes a cache miss and effectively invalidates the old copy.
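The origin-pull behavior described above can be modeled in a few lines. This is a toy in-memory model, not CloudFront's API: `EdgeCache` and the fingerprinted paths are hypothetical, and a real CDN also honors the Cache-Control headers the origin sends.

```ruby
# Toy edge cache: keyed by URL path, asks the origin only on a miss.
class EdgeCache
  attr_reader :origin_fetches

  def initialize(&origin)
    @origin = origin
    @store = {}
    @origin_fetches = 0
  end

  # Returns [:hit, body] or [:miss, body].
  def get(path)
    return [:hit, @store[path]] if @store.key?(path)

    @origin_fetches += 1
    @store[path] = @origin.call(path)
    [:miss, @store[path]]
  end
end

# Hypothetical origin server files, named by content fingerprint.
origin_files = { "/application-aaa111.js" => "v1", "/application-bbb222.js" => "v2" }
edge = EdgeCache.new { |path| origin_files.fetch(path) }

p edge.get("/application-aaa111.js") # => [:miss, "v1"] (fetched from origin)
p edge.get("/application-aaa111.js") # => [:hit, "v1"]  (served from the edge)
p edge.get("/application-bbb222.js") # => [:miss, "v2"] (new name, new cache entry)
```

Because a changed file gets a new name, the stale entry is simply never requested again; no explicit purge is needed.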

Client

On the client, there are a couple of tricks to speed up the browser's rendering, mostly around hinting to the browser which resources it'll need to fetch.

Make sure the initial page is incredibly quick to load - don't do any SQL queries or complex server-side rendering. This will allow the browser to scan the page and figure out which remote resources like JavaScript and CSS it needs to download and parse as quickly as possible.

Add the defer attribute to all <script> tags. This tells the browser the script won't rely on the legacy 'document.write' behavior, so it can download the script in parallel and run it after the document has been parsed; script downloading won't block the rendering of the rest of the page.

```html
<script defer src="/application.js"></script>
```

Be aware that combining defer with some libraries will break in a few browsers, such as IE 9 and below.
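As a sketch of the 'incredibly quick initial page' idea, the HTML shell can be rendered once when the app boots and served as a constant string, with every script marked defer. The asset file names are hypothetical fingerprinted names, and including /setup.js as a deferred script after application.js is an assumption; deferred scripts run in document order, so the app code is defined before setup.js calls into it.

```ruby
# Render the page shell once: no SQL, no per-request templating.
def render_shell(css:, js:)
  <<~HTML
    <!DOCTYPE html>
    <html>
      <head>
        <meta charset="utf-8">
        <link rel="stylesheet" href="#{css}">
        <script defer src="#{js}"></script>
        <script defer src="/setup.js"></script>
      </head>
      <body></body>
    </html>
  HTML
end

SHELL = render_shell(css: "/application-3f2a.css", js: "/application-9c1d.js").freeze

# Rack-style endpoint returning the same frozen string for every request.
shell_app = ->(_env) { [200, { "Content-Type" => "text/html; charset=utf-8" }, [SHELL]] }
```

Since the browser sees the stylesheet and script references immediately, it can start fetching them while the server does nothing but write a constant string.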

Ensure that all scripts that don't need to be present on page load, such as analytics, are appended to the page dynamically.

```javascript
// Load non-critical scripts only after the page has finished loading.
$(window).on('load', function () {
  $.getScript('http://some/analytics.js');
});
```

You'll notice that in Monocle the 'Top Posts' load as part of the initial page load: you'll never see a flash of white unloaded content, or a loading spinner. I've achieved this by having a separate script, /setup.js, which is referenced in the initial page. setup.js contains the top posts, and bootstraps the app with initial data.
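A minimal sketch of what generating /setup.js might look like on the server: the top posts are serialized to JSON and passed to a client-side entry point in a single script. `App.bootstrap` and the post fields shown are hypothetical; the original doesn't describe setup.js's exact contents.

```ruby
require "json"

# Build the body of /setup.js: one call that hands the initial data to the
# client app, so it can render top posts without a second round trip.
def render_setup_js(posts)
  "App.bootstrap(#{JSON.generate({ posts: posts })});\n"
end

# Hypothetical top posts, as the denormalized serializer might return them.
top_posts = [
  { id: 1, title: "Time to first tweet", comments_count: 12 },
  { id: 2, title: "Hello world", comments_count: 0 }
]

puts render_setup_js(top_posts)
```

Serving this as its own script keeps the HTML shell free of database work, while the data still arrives before the app's first render.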

Tools

I want to finish with a few page speed tools that have been invaluable in speeding up monocle.io's loading time.

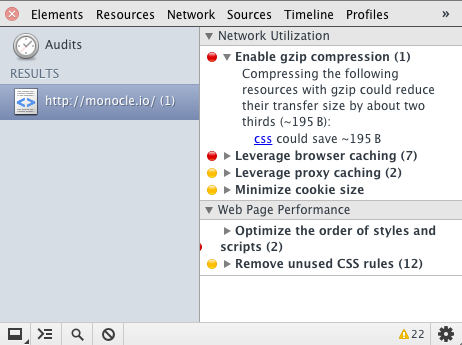

Use the audit tab in the Google Chrome Inspector to get insight into some performance metrics, such as browser caching and gzip compression. It's worth running it in incognito mode, as some extensions can skew the results by injecting their own assets. Note too that the Inspector's cache settings can affect the results.

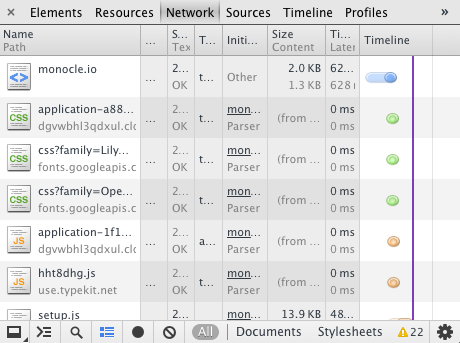

Chrome's network inspector is also invaluable for seeing what's affecting your page load times. You want to move those blue and red lines (DOMContentLoaded & load) as far to the left as possible: remove unnecessary assets, and cache as much as you can, so that less has to load before DOMContentLoaded fires.

Google have some really excellent articles on page speed, as well as an extremely useful tool called PageSpeed Insights that will analyze your web app and provide you with a bunch of tips on improving page rendering.

Fast is possible

Hopefully I've demonstrated here that it's perfectly possible to create extremely fast-loading JavaScript web applications. Once the application's assets are on the client, things only get faster: rendering templates on the client is extremely fast, and you can provide a responsive, asynchronous UI that makes interactions feel even quicker.

[1] - It turns out that in my haste to dispel FUD, I've actually gone ahead and spread more. Modern Ruby DB adapters don't hold the GIL during IO: the interpreter is 'unlocked' while they wait, so other threads can run. Furthermore, Rails 4 is threaded by default.